Bagging Predictors: Machine Learning

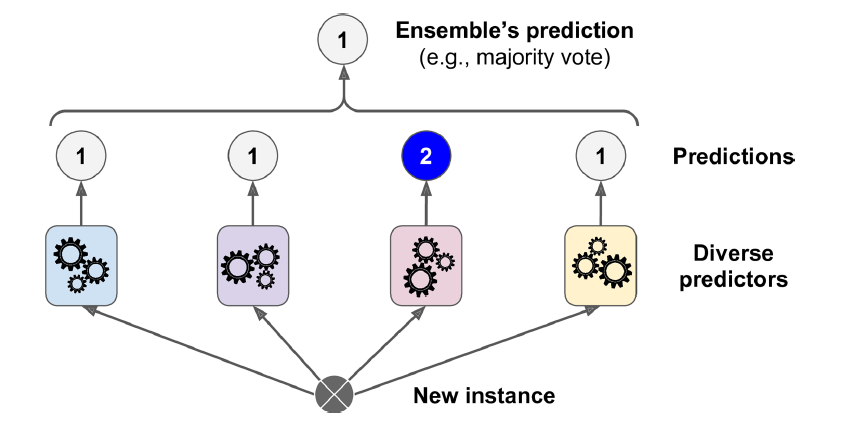

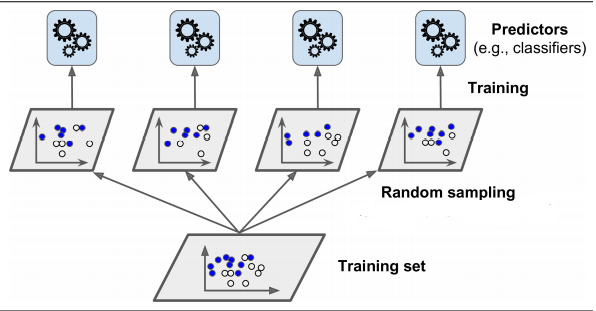

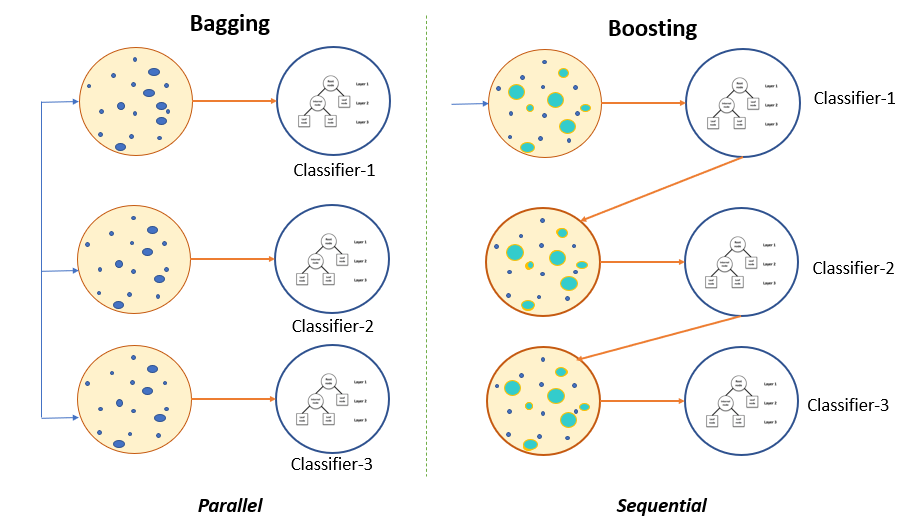

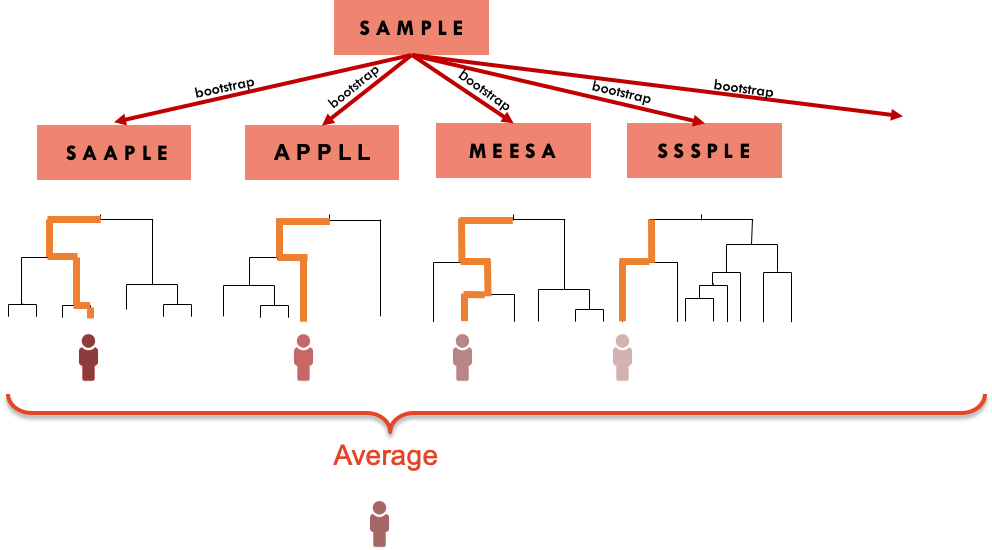

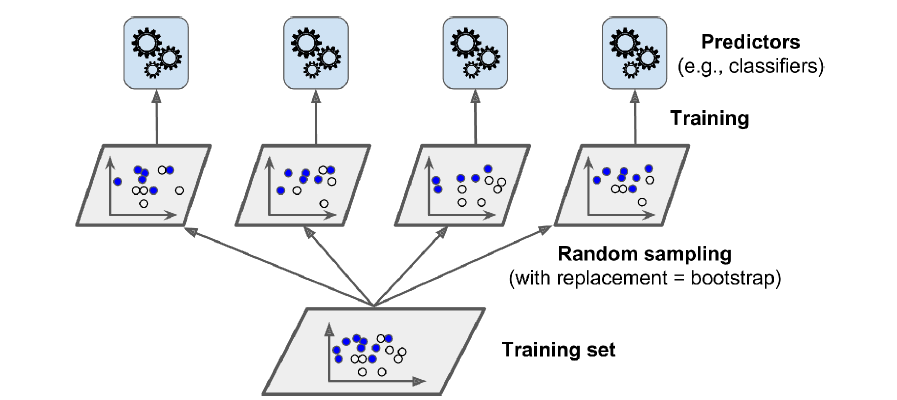

The aggregation averages over the versions when predicting a numerical outcome and does a plurality vote when predicting a class. The figures above illustrate how training instances are sampled for a predictor in bagging ensemble learning.
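The two aggregation rules can be sketched in a few lines of Python. The function names `aggregate_regression` and `aggregate_classification` are hypothetical. Ties in the vote are broken by first occurrence, because that is how `collections.Counter.most_common` orders equal counts; this is one arbitrary choice, not a rule from the bagging literature.

```python
from collections import Counter
from statistics import mean


def aggregate_regression(predictions):
    """Numerical outcome: average the predictions of all bagged versions."""
    return mean(predictions)


def aggregate_classification(predictions):
    """Class outcome: plurality vote, i.e. the most frequently predicted class.

    Equal counts are ordered by first occurrence, so ties go to the class
    that appears first in `predictions`.
    """
    return Counter(predictions).most_common(1)[0][0]


# Usage: five versions of a predictor, each predicting for the same input
print(aggregate_regression([2.0, 3.0, 2.5, 3.5, 4.0]))  # 3.0
print(aggregate_classification(["blue", "blue", "red", "blue", "red"]))  # blue
```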

Ensemble Techniques Part 1 Bagging Pasting By Deeksha Singh Geek Culture Medium

Breiman, L. (1996). Bagging predictors. *Machine Learning*, 24, 123–140.

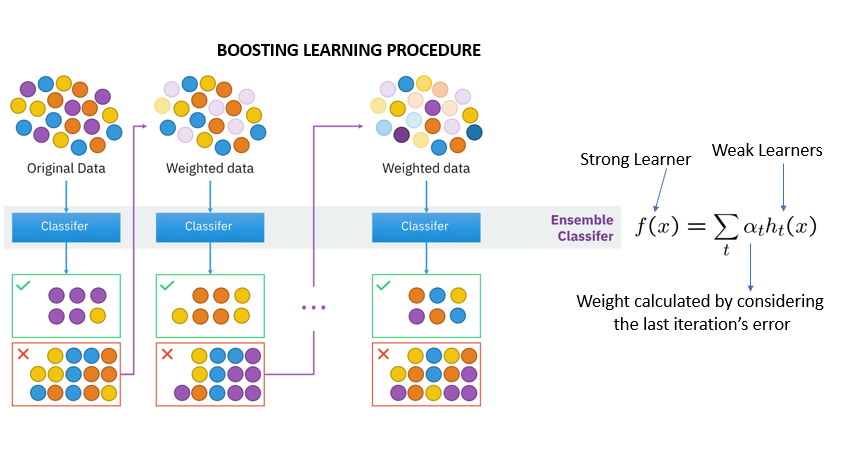

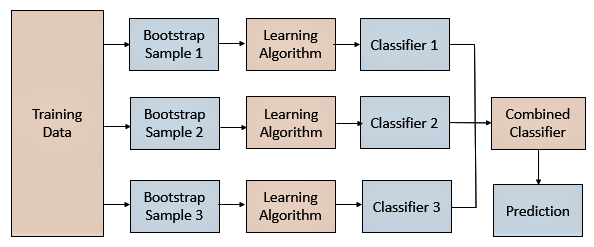

Bagging predictors is a method for generating multiple versions of a predictor and using these to get an aggregated predictor. Ensemble methods such as bagging and boosting, which combine the decisions of multiple hypotheses, are among the strongest existing machine learning methods.

Bagging aims to improve the accuracy and performance of machine learning algorithms. In this post, you discovered the bagging ensemble machine learning method. If the bagged models predicted the classes blue, blue, red, blue, and red for an input, we would take the most frequent class and predict blue.

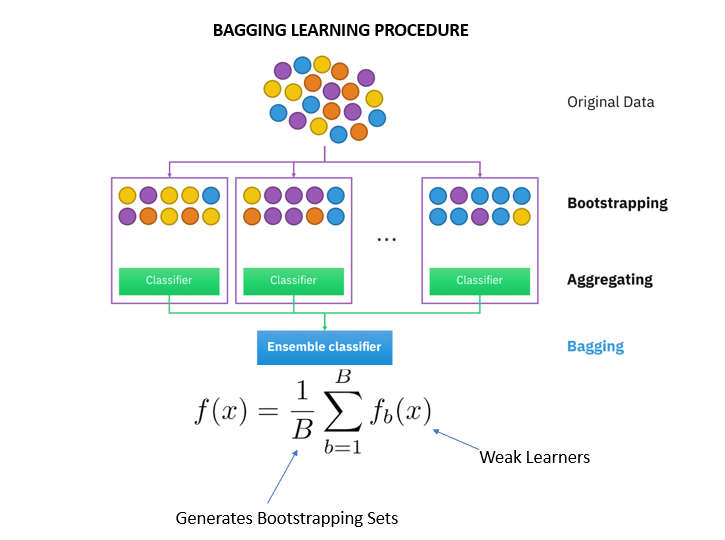

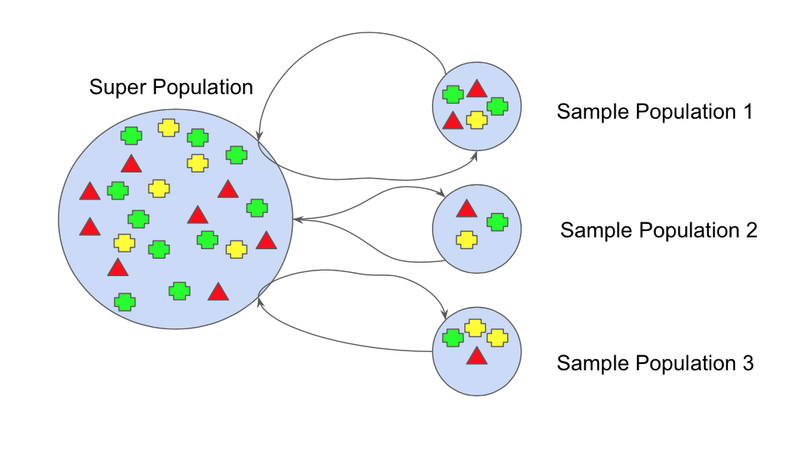

Bootstrap aggregating, also called bagging (from bootstrap aggregating), is a machine learning ensemble meta-algorithm designed to improve the stability and accuracy of machine learning algorithms used in statistical classification and regression. It also reduces variance and helps to avoid overfitting. Although it is usually applied to decision tree methods, it can be used with any type of method. For example, if 5 bagged decision trees each made a class prediction for an input sample, the ensemble would predict the class that received the most votes.
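Why averaging reduces variance can be seen with a standard idealized calculation. Assume each of the $B$ bagged predictors $\hat f_b(x)$ has the same variance $\sigma^2$ and every pair has correlation $\rho$ (an idealization; real bootstrap replicates only approximate this). Then the variance of their average is:

$$
\operatorname{Var}\!\left(\frac{1}{B}\sum_{b=1}^{B}\hat f_b(x)\right)
= \frac{1}{B^2}\Big(B\sigma^2 + B(B-1)\,\rho\,\sigma^2\Big)
= \rho\,\sigma^2 + \frac{1-\rho}{B}\,\sigma^2
$$

As $B$ grows, the second term vanishes, but the first term $\rho\,\sigma^2$ remains. Adding more bagged models therefore helps only up to a point; further gains come from making the members less correlated.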

Improving nonparametric regression methods by bagging and boosting. Bagging can be used with any machine learning algorithm, but it is particularly useful for decision trees because they inherently have high variance. After several data samples are generated, a separate model is trained on each of them.
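To illustrate "any machine learning algorithm", here is a minimal from-scratch sketch in which the base learner is simply a function passed in. The names `fit_stump`, `bagging_fit`, and `bagging_predict` are hypothetical. The one-split regression stump on a single feature is an assumed stand-in for a full decision tree.

```python
import random
from statistics import mean


def fit_stump(xs, ys):
    """Hypothetical base learner: a one-split regression tree on one feature.

    Tries every threshold between adjacent distinct x values and keeps the one
    with the lowest sum of squared errors. Returns a predict(x) function.
    """
    pairs = sorted(zip(xs, ys))
    fallback = mean(ys)
    best_sse = float("inf")
    rule = (float("inf"), fallback, fallback)  # no valid split: predict the mean
    for i in range(1, len(pairs)):
        if pairs[i - 1][0] == pairs[i][0]:
            continue  # cannot place a threshold between identical x values
        left = [y for _, y in pairs[:i]]
        right = [y for _, y in pairs[i:]]
        lm, rm = mean(left), mean(right)
        sse = sum((y - lm) ** 2 for y in left) + sum((y - rm) ** 2 for y in right)
        if sse < best_sse:
            best_sse = sse
            rule = ((pairs[i - 1][0] + pairs[i][0]) / 2, lm, rm)
    threshold, left_value, right_value = rule
    return lambda x: left_value if x <= threshold else right_value


def bagging_fit(xs, ys, fit, n_estimators=25, seed=0):
    """Train one model per bootstrap sample; `fit` can be any learner."""
    rng = random.Random(seed)
    n = len(xs)
    models = []
    for _ in range(n_estimators):
        idx = [rng.randrange(n) for _ in range(n)]  # n draws with replacement
        models.append(fit([xs[i] for i in idx], [ys[i] for i in idx]))
    return models


def bagging_predict(models, x):
    """Regression aggregation: average the individual predictions."""
    return mean(model(x) for model in models)


# Usage: a noisy step function, roughly 1 for x <= 4 and roughly 3 for x >= 5
xs = [1, 2, 3, 4, 5, 6, 7, 8]
ys = [1.0, 1.2, 0.9, 1.1, 3.0, 3.2, 2.9, 3.1]
models = bagging_fit(xs, ys, fit_stump, n_estimators=50, seed=42)
print(bagging_predict(models, 2), bagging_predict(models, 7))
```

Because `bagging_fit` only calls `fit(xs, ys)` and expects a callable back, the stump could be swapped for any other learner with the same shape.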

In the above example, the training set has 7 instances.
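For a training set of 7 instances, it helps to compute how many distinct instances one bootstrap sample is expected to contain. Each instance is missed by all $n$ draws with probability $(1 - 1/n)^n$, so the expected fraction that appears at least once is $1 - (1 - 1/n)^n$. The sketch below evaluates that for $n = 7$ and checks it by simulation. The function names are hypothetical.

```python
import math
import random


def expected_unique_fraction(n):
    """Expected share of the n training instances that appear at least once
    in a bootstrap sample of size n drawn with replacement."""
    return 1 - (1 - 1 / n) ** n


def simulated_unique_fraction(n, trials, seed=0):
    """Monte Carlo estimate of the same quantity."""
    rng = random.Random(seed)
    total = sum(len({rng.randrange(n) for _ in range(n)}) for _ in range(trials))
    return total / (n * trials)


print(round(expected_unique_fraction(7), 3))  # 0.66
print(round(1 - 1 / math.e, 3))               # 0.632, the limit as n grows
print(simulated_unique_fraction(7, 10_000))
```

So each predictor sees only about two thirds of the distinct training instances, with some of them repeated.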

*Applying a bagging ensemble machine learning approach to predict functional outcome of schizophrenia with clinical symptoms and cognitive functions*, by Eugene Lin, Chieh-Hsin Lin, and Hsien-Yuan Lane. Eugene Lin: Department of Biostatistics, University of Washington, Seattle, WA 98195, USA.

Computational Statistics and Data Analysis. *Machine Learning*, 24, 123–140 (1996), © 1996 Kluwer Academic Publishers, Boston.

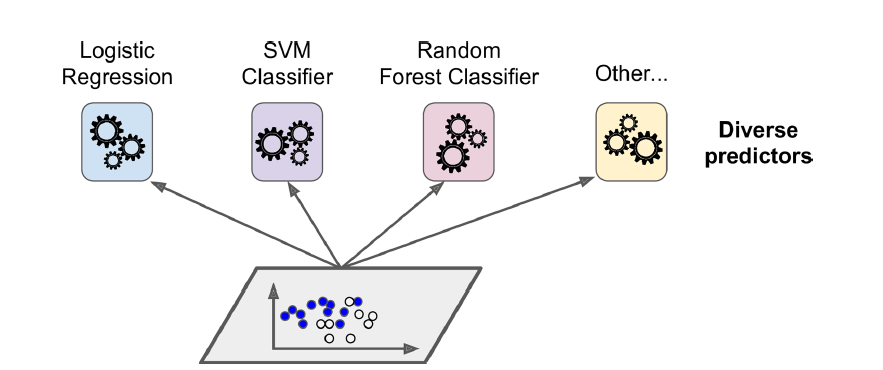

This research aims to assess and compare the performance of single and ensemble classifiers built from Support Vector Machines (SVM) and Classification Trees (CT) using simulation data. The simulation data is based on three … Almost all statistical prediction and learning problems encounter a bias-variance tradeoff.
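For squared-error loss, the tradeoff has a standard decomposition. Assume $y = f(x) + \varepsilon$, where the noise $\varepsilon$ has mean zero and variance $\sigma_\varepsilon^2$ and is independent of the fitted model $\hat f$, which is itself random because it depends on the training set:

$$
\mathbb{E}\big[(y - \hat f(x))^2\big]
= \underbrace{\big(f(x) - \mathbb{E}[\hat f(x)]\big)^2}_{\text{bias}^2}
+ \underbrace{\operatorname{Var}\big(\hat f(x)\big)}_{\text{variance}}
+ \underbrace{\sigma_\varepsilon^2}_{\text{irreducible noise}}
$$

Bagging mainly targets the variance term. The bias of the aggregated predictor stays roughly that of its base learner, which is why low-bias, high-variance learners such as deep decision trees benefit most.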

In this blog, we will explore the Bagging algorithm and a computationally more efficient variant of it, Subagging. Given a new dataset, calculate the average prediction from each model.
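Subagging (subsample aggregating) replaces the bootstrap with subsamples drawn *without* replacement, each smaller than the training set, which makes every model cheaper to fit. Below is a minimal sketch that assumes half-sized subsamples (`fraction=0.5`) and an ordinary least-squares line as the base learner. Both are illustrative choices, and `fit_line`, `subagging_fit`, and `subagging_predict` are hypothetical names. For each new input, the prediction is the average over all models.

```python
import random
from statistics import mean


def fit_line(xs, ys):
    """Hypothetical base learner: ordinary least-squares line y = a + b*x."""
    x_bar, y_bar = mean(xs), mean(ys)
    sxx = sum((x - x_bar) ** 2 for x in xs)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    b = sxy / sxx if sxx else 0.0  # guard against all-equal x values
    a = y_bar - b * x_bar
    return lambda x: a + b * x


def subagging_fit(xs, ys, fit, n_estimators=20, fraction=0.5, seed=0):
    """Fit one model per subsample of size m < n drawn without replacement."""
    rng = random.Random(seed)
    n = len(xs)
    m = max(2, int(fraction * n))
    models = []
    for _ in range(n_estimators):
        idx = rng.sample(range(n), m)  # no index repeats within a subsample
        models.append(fit([xs[i] for i in idx], [ys[i] for i in idx]))
    return models


def subagging_predict(models, new_xs):
    """For each new input, average the predictions of all models."""
    return [mean(model(x) for model in models) for x in new_xs]


# Usage: the line y = 2x + 1 with alternating +/-0.5 noise
xs = list(range(10))
ys = [2 * x + 1 + (0.5 if x % 2 else -0.5) for x in xs]
models = subagging_fit(xs, ys, fit_line, n_estimators=20, seed=1)
print(subagging_predict(models, [4.5]))
```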

The results show that clustering before prediction can improve prediction accuracy. Important customer groups can also be identified from customer behavior and temporal data.

Leo Breiman, Statistics Department, University of California, Berkeley, CA 94720. Bagging, also known as bootstrap aggregation, is an ensemble learning method commonly used to reduce variance within a noisy dataset. Methods such as decision trees can be prone to overfitting the training set, which can lead to wrong predictions on new data.

Customer churn prediction was carried out using AdaBoost classification and backpropagation (BP) neural network techniques. In machine learning, we have a set of input variables x that are used to determine an output variable y.

With minor modifications, these algorithms are also known as Random Forest and are widely applied here at STATWORX, in industry, and in academia. When the relationship between a set of predictor variables and a response variable is linear, we can use methods like multiple linear regression to model the relationship between the variables.
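One common "minor modification" that turns bagged trees into a random forest is to let each split consider only a random subset of the features. The sketch below draws such a subset. The helper name `candidate_features` is hypothetical, and the default of about $\sqrt{p}$ features per split is a common convention for classification that we assume here, not a fixed rule.

```python
import math
import random


def candidate_features(n_features, rng, max_features=None):
    """Pick the feature indices one tree split is allowed to consider.

    Defaults to roughly sqrt(p) features (an assumed convention); the tree
    would then search for its best split only among these indices.
    """
    k = max_features or max(1, math.isqrt(n_features))
    return sorted(rng.sample(range(n_features), k))


rng = random.Random(0)
for split in range(3):
    print(f"split {split}: consider features {candidate_features(16, rng)}")
```

Because different splits and trees see different feature subsets, the trees become less correlated than plain bagged trees, which is the point of the modification.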

In bagging, a random sample of data in a training set is selected with replacement, meaning that individual data points can be chosen more than once. The diversity of the members of an ensemble is known to be an important factor in determining its generalization error. Bootstrap aggregation (bagging) is an ensembling method that attempts to resolve overfitting in classification or regression problems.
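Sampling with replacement can be shown directly. The sketch below draws one bootstrap sample from a hypothetical training set of seven labeled items and also reports the "out-of-bag" items, the ones this particular predictor never sees. The function name `bootstrap_sample` and the item labels are illustrative assumptions.

```python
import random


def bootstrap_sample(data, rng):
    """Draw len(data) items uniformly with replacement.

    Returns the sample (which may contain repeats) and the out-of-bag
    items that were never drawn.
    """
    idx = [rng.randrange(len(data)) for _ in range(len(data))]
    chosen = set(idx)
    sample = [data[i] for i in idx]
    out_of_bag = [data[i] for i in range(len(data)) if i not in chosen]
    return sample, out_of_bag


rng = random.Random(7)
training_set = ["x1", "x2", "x3", "x4", "x5", "x6", "x7"]
sample, oob = bootstrap_sample(training_set, rng)
print("bootstrap sample:", sample)  # repeated items are typical
print("out-of-bag:", oob)           # items this predictor never trained on
```

Each predictor gets a different sample and therefore a different out-of-bag set, which is one source of the diversity among ensemble members.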

Ensemble Learning Basics And Overview By Hiteshwar Singh Geek Culture Medium

Ensemble Methods In Machine Learning Bagging Versus Boosting Pluralsight

Bagging Machine Learning Through Visuals 1 What Is Bagging Ensemble Learning By Amey Naik Machine Learning Through Visuals Medium

2 Bagging Machine Learning For Biostatistics

Bootstrap Aggregating By Wikipedia

Bagging Bootstrap Aggregation Overview How It Works Advantages

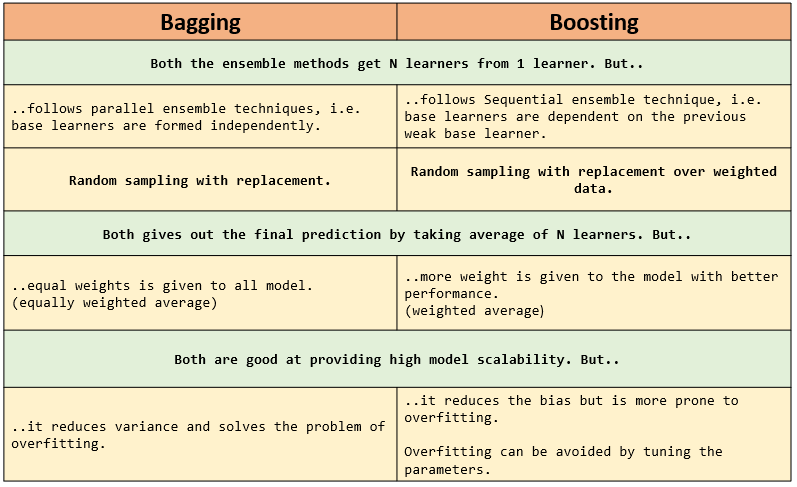

Bagging Vs Boosting In Machine Learning Geeksforgeeks

Data Mining And Machine Learning Predictive Techniques Ensemble Methods Boosting Bagging Random Forest Decision Trees And Regression Trees By Cesar Perez Lopez Ebook Scribd